Generative search systems select, synthesize, and present brand information instead of retrieving ranked pages. That single shift changes what visibility means for brands.

Many brands assume visibility disappears when rankings drop. In reality, visibility disappears when a brand is not selected or understood by AI systems generating answers. Ranking can still exist while representation does not.

Multiple industry studies now confirm this gap. Even brands that rank in Google’s top results have only a limited probability of appearing in AI-generated answers, showing that AI systems do not simply reuse traditional search rankings.

In traditional search, visibility depends on placement in a list of links. In generative search, visibility depends on whether a brand becomes part of the answer itself. AI systems evaluate entities, assess source reliability, and assemble responses based on contextual consistency, not keyword position.

This changes how brand reputation works. Reputation is no longer shaped only by owned content or media coverage. It is shaped by how consistently a brand appears across trusted sources, and how clearly its expertise can be verified by machines over time.

This is not an SEO problem. Rather, it is a brand visibility and reputation problem created by generative systems. That distinction explains why some brands lose influence without losing traffic, while others gain relevance without gaining clicks.

To address this shift, we need a different definition of visibility, one that reflects how AI systems select, trust, and represent brands.

What Is AI Brand Visibility?

AI brand visibility refers to the likelihood that a brand is selected, trusted, and represented accurately when AI systems generate answers.

Analysis of AI-generated answers points to three characteristics that define AI brand visibility.

1. AI visibility is entity-centric

AI systems reason about brands as entities, not pages. They look for clear signals about what a brand does, where it is relevant, and how consistently that information appears across credible sources. One well-optimized page rarely changes this outcome on its own.

2. AI visibility is probabilistic

Language models do not retrieve a single “best” page. They weigh relevance and reliability across many passages and sources, a behavior repeatedly described in technical explanations from OpenAI. Visibility increases when a brand appears repeatedly and coherently across contexts the model considers relevant.

3. AI visibility is representational

AI visibility is representational, not transactional. When AI systems generate answers, they do not simply surface sources. They summarize, paraphrase, and contextualize information, deciding how a brand is described, not just whether it is mentioned.

As can be seen in real AI responses, this creates a new visibility risk.

“A brand can be present, yet represented inaccurately or incompletely. Another brand can be absent entirely, even if it performs well in traditional search,” says Discovery Lab.

Visibility exists on a spectrum defined by accuracy, context, and framing.

Industry analysis from Search Engine Land repeatedly highlights this shift. In generative search, brand mentions and citations function more like reputation signals than traffic drivers.

A simple example makes this tangible.

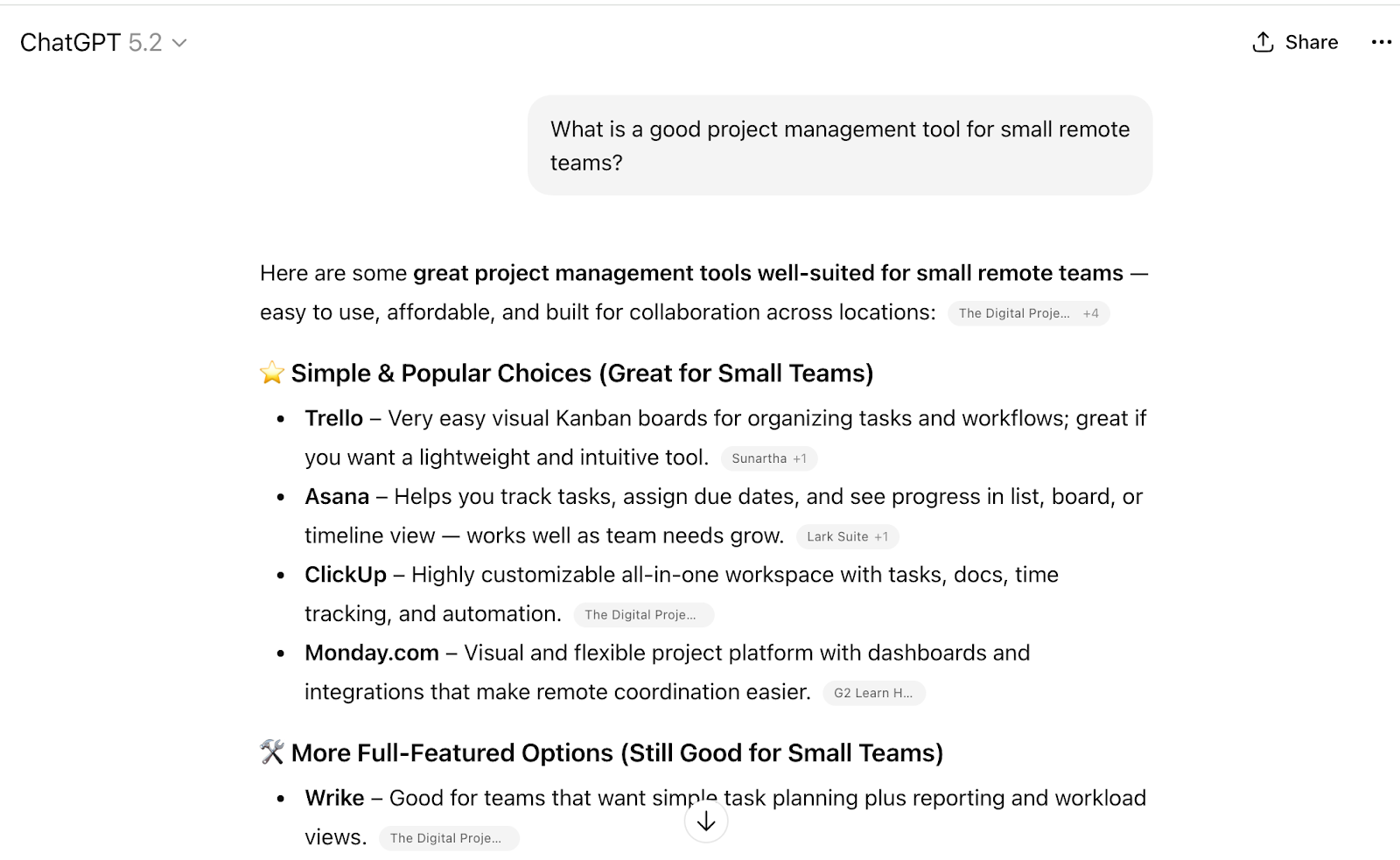

Ask an AI assistant a question like “What is a good project management tool for small remote teams?” The response usually does not include a ranked list of websites. Instead, the AI mentions a few tools and briefly explains what each one is known for.

Those short explanations matter. They become the brand’s default description inside the AI system.

For example, one tool may be described as “simple and affordable,” another as “best for enterprise workflows,” and another as “popular but complex.” These labels are not pulled from a single page. They are synthesized from how each brand appears across documentation, reviews, articles, and third-party discussions.

If a brand is consistently described across credible sources, the AI’s summary feels accurate. If the information is fragmented, outdated, or contradictory, the AI still produces an answer, but the brand’s positioning becomes vague or misleading.

How Generative Search Changes Brand Reputation

Generative search turns brand reputation into a computed outcome rather than a message a brand controls directly, a shift consistently reflected in AI-generated answers.

1. Reputation is inferred, not declared

AI systems do not take brand claims at face value. They infer reputation from patterns across the web: how a brand is described, where it appears, and how consistent those descriptions are across credible sources.

This reflects how modern search systems prioritize synthesized understanding over individual pages, as described in guidance from Google Search Central. Large language models extend this by generating answers based on their internal interpretation of consensus, not brand messaging.

2. Third-party sources shape the narrative

In generative search, reputation is built outside a brand’s own website. Reviews, media coverage, forums, and historical references all influence how AI systems describe a brand.

3. Inconsistency weakens trust signals

AI systems reward clarity and agreement. When brand descriptions conflict across sources, AI still produces an answer, but trust signals weaken. The result is vague, outdated, or incomplete representation.

Why this matters

A brand can maintain rankings and traffic while losing narrative control in AI-generated answers.


How AI Decides Which Brands to Show

AI systems decide which brands to show by expanding a single question into many sub-queries, retrieving passages across the web, and synthesizing answers from the most relevant and reliable signals, not by reusing Google’s top rankings.

This decision process consistently follows four patterns.

1. AI expands one question into many queries (query fan-out)

Generative search systems rarely evaluate a query as-is. They break one prompt into multiple related queries, retrieve passages for each, and then combine the results.

This process, often called query fan-out, explains why AI answers include sources that never ranked for the original keyword. Google has publicly described this shift toward synthesis and multi-step understanding in its AI search documentation.
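To make fan-out concrete, here is a minimal Python sketch under toy assumptions: the sub-query templates in `fan_out` and the dictionary `index` are hypothetical stand-ins for the language model and search backend a real system would use. It only illustrates how a passage can surface through a related sub-query even when it was never retrieved for the original wording.

```python
from collections import defaultdict


def fan_out(query: str) -> list[str]:
    """Expand one prompt into related sub-queries.

    Hypothetical fixed templates; a real system would generate these with a model.
    """
    return [query] + [f"{query} {suffix}" for suffix in ("pricing", "reviews", "alternatives")]


def fan_out_retrieve(query: str, index: dict[str, list[str]]) -> dict[str, int]:
    """Retrieve passages for every sub-query and count how often each one surfaces."""
    hits: dict[str, int] = defaultdict(int)
    for sub_query in fan_out(query):
        # Toy retrieval: look the sub-query up in a prebuilt index.
        for passage_id in index.get(sub_query, []):
            hits[passage_id] += 1
    return dict(hits)


# Hypothetical index mapping sub-queries to passage IDs.
index = {
    "project management tool": ["brand-a/home"],
    "project management tool reviews": ["forum/thread-12", "review/brand-b"],
    "project management tool alternatives": ["review/brand-b"],
}
print(fan_out_retrieve("project management tool", index))
# {'brand-a/home': 1, 'forum/thread-12': 1, 'review/brand-b': 2}
```

In this toy run, `review/brand-b` never matches the original query, yet it surfaces twice through the reviews and alternatives sub-queries.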

2. AI retrieves passages, not “the best page”

AI systems operate at the passage level, not the page level. They pull small, relevant sections from many documents and weigh them together before generating a response.

This behavior aligns with retrieval-augmented generation (RAG), where models retrieve multiple relevant passages and synthesize an answer instead of selecting a single source.
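Passage-level selection can be sketched in a few lines of Python. This is a simplified illustration, not how any particular AI product works: word overlap stands in for the embedding similarity a real RAG pipeline would typically use, and paragraph breaks stand in for its chunking rules. The documents are hypothetical. The point is that the top results are individual passages drawn from several documents, not one winning page.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_passages(doc_id: str, text: str) -> list[tuple[str, str]]:
    """Split a document into paragraph-level passages with stable IDs."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [(f"{doc_id}#p{i}", p) for i, p in enumerate(paragraphs)]


def top_passages(query: str, docs: dict[str, str], k: int = 3) -> list[str]:
    """Score every passage from every document and keep the k best overall."""
    q = tokenize(query)
    scored = []
    for doc_id, text in docs.items():
        for passage_id, passage in split_passages(doc_id, text):
            overlap = len(q & tokenize(passage))
            if overlap:
                scored.append((overlap, passage_id))
    # Highest overlap first; ties broken by passage ID for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [passage_id for _, passage_id in scored[:k]]


docs = {
    "vendor": "We build software.\n\nOur tool helps small remote teams plan projects.",
    "review": "A review of project tools for remote teams.\n\nPricing is fair.",
}
print(top_passages("project tool for small remote teams", docs))
# ['review#p0', 'vendor#p1']
```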

3. Ranking highly in Google does not guarantee AI visibility

One of the most persistent misconceptions is that strong SEO rankings translate directly into AI visibility. The data does not support that assumption.

Multiple studies discussed by SparkToro and iPullRank show that ranking in Google’s top 10 results gives brands only about a 25% chance of appearing in AI-generated answers, with overlap typically falling between roughly 19% and 39%.

This gap exists because AI systems evaluate relevance across many fan-out queries and passages, not a single SERP.

4. Relevance and consistency outweigh classic SEO signals

Research referenced by Michael King, founder of iPullRank, shows that traditional ranking factors explain only a small portion of AI citations, while topical relevance, clarity, freshness, and consistency correlate much more strongly.

In practice, AI selection works like a probability system. Each relevant, coherent passage a brand owns increases its chances of being included. One isolated page rarely moves the needle.
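A simple model shows why coverage compounds. Assume, purely for illustration, that each relevant passage is independently selected with the same small probability p. The chance that at least one of n passages makes it into the answer is then 1 - (1 - p)^n. Real systems are not this tidy (passages are neither independent nor equally likely), so treat the numbers as intuition rather than measurement.

```python
def inclusion_probability(p_per_passage: float, n_passages: int) -> float:
    """Chance that at least one of n independent passages is selected.

    Illustrative model only: assumes independence and a uniform probability p.
    """
    if not 0.0 <= p_per_passage <= 1.0:
        raise ValueError("p_per_passage must be between 0 and 1")
    if n_passages < 0:
        raise ValueError("n_passages must be non-negative")
    return 1.0 - (1.0 - p_per_passage) ** n_passages


# Hypothetical p of 5% per passage: one isolated page vs. twenty coherent passages.
print(round(inclusion_probability(0.05, 1), 4), round(inclusion_probability(0.05, 20), 4))
# 0.05 0.6415
```

With that hypothetical p, one passage yields a 5% chance, while twenty coherent passages push the chance to roughly 64%.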

AI visibility is probabilistic, shaped by repeated and coherent relevance across contexts rather than single rankings.

This is why AI visibility behaves less like SEO performance and more like coverage, clarity, and reputation at scale.

Trust, Citation, and Authority in AI Search

AI systems trust and cite brands based on consistency, verifiability, and third-party confirmation, not on self-promotion or rankings.

Here’s how trust, citation, and authority actually work in AI search.

1. Trust comes from consistency, not claims

AI systems do not evaluate intent. They evaluate patterns. When a brand’s description, expertise, and positioning remain consistent across credible sources, trust signals strengthen. When those signals conflict, trust weakens even if the brand ranks well.

This mirrors how modern search systems define quality and reliability, as outlined in Google’s guidance on helpful, people-first content.

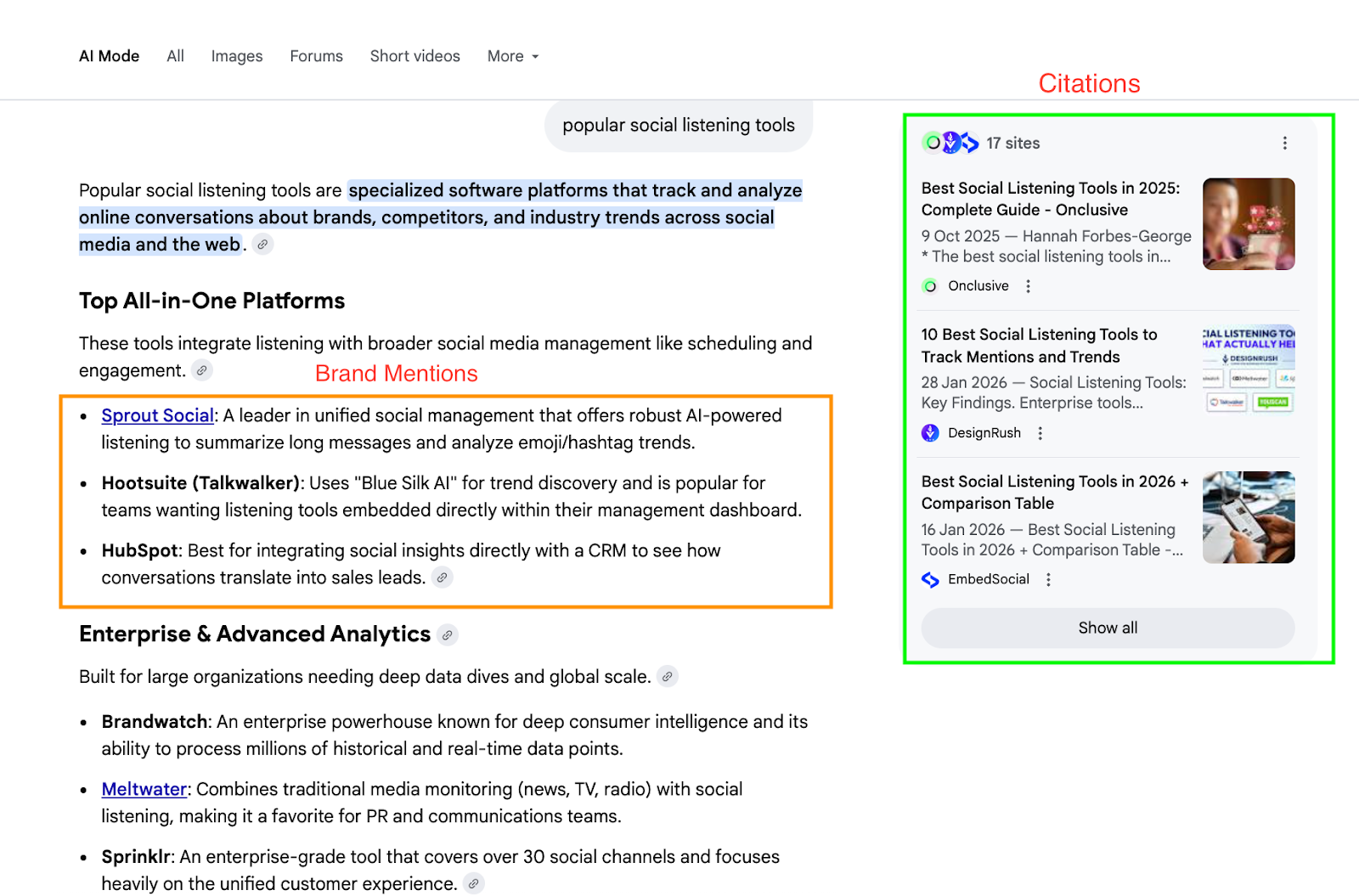

2. Citation signals authority, not popularity

Being mentioned is not the same as being cited. AI systems treat citations as stronger authority signals because they indicate that a source contributed meaningfully to the answer.

Industry analysis shows that AI answers favor sources that explain, contextualize, and clarify topics, not those that merely repeat keywords or brand names.

3. Third-party validation matters more than owned content

AI systems rely heavily on external consensus. Reviews, media coverage, documentation, forums, and expert commentary often outweigh what a brand says about itself.

Trust emerges when multiple independent sources reinforce the same narrative, a principle long established in trust research.

4. Authority is contextual, not global

AI systems do not treat authority as universal. A brand can be trusted in one context and ignored in another. Authority depends on topic alignment, not brand size.

This reflects how language models retrieve and synthesize information across topic-specific passages, rather than deferring to a single “authoritative” domain.


The New Role of Content, PR, and Communications

In generative search, content, PR, and communications shape AI trust signals together, not as separate channels, and not as optimization tactics.

Across AI-generated answers, brands whose teams treat these functions as one system are far more likely to be cited and represented accurately.

Here’s how their roles change.

1. Content defines context, not just keywords

Content no longer exists only to rank or convert. It exists to clarify what a brand is about, where it is relevant, and how it should be described.

AI systems rely on clear, well-structured explanations to understand topics and entities. This aligns with Google’s guidance that prioritizes helpful, people-first content designed to explain, not manipulate.

2. PR reinforces trust through third-party validation

PR now functions as a machine-readable trust layer. Media coverage, expert commentary, and credible mentions help AI systems verify that a brand’s claims are supported elsewhere.

In generative search, third-party references often carry more weight than owned content because they signal external agreement, not self-promotion.

This mirrors long-standing trust research showing that credibility increases when information is reinforced by independent sources.

This pattern is already visible in real AI citation behavior. In our white paper on AI Visibility of Indonesian Banks 2025, nearly 70% of all citations in AI-generated answers came from earned media and editorial news outlets, while owned media primarily served to provide product details and clarifications.

This shows that in the age of generative search, earned media is no longer just a PR outcome; it has become a foundational trust signal for AI systems shaping brand reputation.

Read the full white paper here to explore the complete findings and strategic implications.

3. Communications aligns narratives across the ecosystem

AI systems penalize inconsistency. When messaging varies across websites, press coverage, documentation, and community discussions, AI still generates answers, but trust signals weaken.

Communications teams play a critical role in aligning how a brand is described everywhere, reducing contradictions that confuse both humans and machines.

Industry commentary highlights that AI visibility depends less on aggressive optimization and more on narrative consistency across channels.

4. None of this works in isolation

Content without PR lacks external validation. PR without clear content lacks context. Communications without coordination creates fragmentation.

Generative systems do not reward individual tactics. They reward coherent systems of signals that make a brand easy to understand and easy to verify.

This is why AI visibility cannot be “owned” by SEO alone. It requires collaboration across content, PR, and communications.

Measuring AI Visibility Beyond SEO Metrics

AI visibility is measured by how accurately and consistently a brand appears in AI-generated answers, not by rankings, traffic, or clicks.

Brands get stuck here because they keep using SEO dashboards to measure a system that no longer behaves like search.

Quick summary table (practical reference)

| What you measure | SEO metric | AI visibility signal |
|---|---|---|
| Presence | Rankings | Frequency of inclusion |
| Accuracy | Page optimization | Representation accuracy |
| Authority | Backlinks | Citations in AI answers |
| Consistency | Keyword focus | Cross-source agreement |

Here are four practical ways to measure AI visibility, with concrete examples.

1. Measure representation accuracy (what AI says about you)

The first question to ask is simple:

Does the AI describe the brand correctly?

Example:

Ask ChatGPT, Gemini, or Perplexity:

“What does [Brand X] do?”

Then review:

- Is the description accurate?

- Is the positioning current?

- Is the use case clear or vague?

If the AI describes your brand using outdated language or incorrect positioning, visibility exists, but reputation is degraded.

This aligns with how generative systems synthesize understanding rather than retrieve pages, as described in Google’s documentation on AI-generated search features.
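The review questions above can be turned into a repeatable checklist. The Python sketch below assumes you paste the AI's answer in by hand (no assistant API is called) and maintain two hypothetical lists: terms that reflect current positioning and retired terms that signal an outdated description. Plain substring matching is deliberately crude; it flags answers for human review rather than grading them.

```python
from dataclasses import dataclass, field


@dataclass
class AccuracyReport:
    missing: list[str] = field(default_factory=list)   # current terms the answer omits
    outdated: list[str] = field(default_factory=list)  # retired terms the answer still uses

    @property
    def accurate(self) -> bool:
        return not self.missing and not self.outdated


def check_description(answer: str, expected: list[str], retired: list[str]) -> AccuracyReport:
    """Compare an AI answer against a brand's current and retired positioning terms."""
    text = answer.lower()
    return AccuracyReport(
        missing=[term for term in expected if term.lower() not in text],
        outdated=[term for term in retired if term.lower() in text],
    )


# Hypothetical answer to "What does [Brand X] do?" and hypothetical positioning terms.
report = check_description(
    "Brand X is a desktop invoicing app for freelancers.",
    expected=["cloud", "small businesses"],
    retired=["desktop"],
)
print(report.accurate, report.missing, report.outdated)
# False ['cloud', 'small businesses'] ['desktop']
```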

2. Track frequency of inclusion, not rank position

AI visibility is probabilistic, not deterministic. One query is not enough.

Example:

Run the same intent-based question 10–20 times with slight variations:

- “best tools for remote teams”

- “software for managing remote projects”

- “project management tools for small distributed teams”

Then track:

- How often your brand appears

- In which contexts

- Compared to competitors

Like rank tracking, this approach is directional: individual results vary, but repeated sampling reveals the trend, an idea long accepted in SEO measurement.
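Tallying these runs is straightforward. A minimal Python sketch, assuming the responses have already been collected (for example, copied from each assistant) and that brand names are matched as whole words, case-insensitively; `BrandA`, `BrandB`, and `BrandC` are hypothetical names, and brand names ending in punctuation would need a different matching rule.

```python
import re


def inclusion_rates(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Share of AI responses that mention each brand at least once."""
    if not responses:
        raise ValueError("need at least one response")
    rates = {}
    for brand in brands:
        # Whole-word, case-insensitive match so "BrandA" does not match "BrandAB".
        pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
        hits = sum(1 for response in responses if pattern.search(response))
        rates[brand] = hits / len(responses)
    return rates


# Hypothetical answers to variations of the same intent-based question.
responses = [
    "BrandA and BrandB work well for remote teams.",
    "BrandB is simple and affordable.",
    "BrandC suits enterprise workflows.",
    "Many teams choose BrandB or BrandC.",
]
print(inclusion_rates(responses, ["BrandA", "BrandB", "BrandC"]))
# {'BrandA': 0.25, 'BrandB': 0.75, 'BrandC': 0.5}
```

Comparing these shares against competitors over time matters more than any single run.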

3. Identify citation vs mention patterns

Being mentioned is weaker than being cited as a source.

Example:

In AI answers, look for:

- Direct references (“according to…”, “based on…”)

- Brand explanations vs passing mentions

If competitors are repeatedly cited while your brand is only mentioned, or absent entirely, that gap signals weaker authority.

This reflects broader trust and credibility research showing that sourced information carries more weight than unsupported claims.
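The citation-versus-mention distinction can be approximated with the attribution cues listed above. In this Python sketch, an answer counts as citing a brand when a single sentence names the brand and contains one of the cue phrases; the sentence splitter, the cue list, and the sample answers are simplifying assumptions, and borderline cases still need a human read.

```python
import re
from collections import Counter

# Attribution phrases from the checklist above; extend as needed.
CITATION_CUES = ("according to", "based on")


def classify(response: str, brand: str) -> str:
    """Return 'cited', 'mentioned', or 'absent' for one brand in one AI answer."""
    sentences = re.split(r"(?<=[.!?])\s+", response)
    brand_lower = brand.lower()
    status = "absent"
    for sentence in sentences:
        s = sentence.lower()
        if brand_lower not in s:
            continue
        if any(cue in s for cue in CITATION_CUES):
            return "cited"
        status = "mentioned"
    return status


def tally(responses: list[str], brand: str) -> Counter:
    """Count cited / mentioned / absent outcomes across many sampled answers."""
    return Counter(classify(response, brand) for response in responses)


r1 = "According to BrandA's 2024 survey, remote teams prefer async tools. BrandB is also popular."
r2 = "BrandB and BrandA both offer free plans."
print(classify(r1, "BrandA"), classify(r2, "BrandA"), classify(r2, "BrandC"))
# cited mentioned absent
```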

4. Compare AI visibility against SEO stability

One of the clearest signals is divergence.

Example:

- SEO rankings remain stable

- Organic traffic holds steady

- But AI answers stop mentioning the brand

This usually indicates:

- Fragmented third-party narratives

- Declining relevance in AI-evaluated contexts

- Strong SEO, weak consensus

Generative systems do not reuse rankings wholesale, which explains why SEO stability does not guarantee AI inclusion.
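Spotting divergence means comparing SEO and AI signals over the same periods. The Python sketch below flags a period as diverging when rankings and organic traffic stay within a tolerance while AI inclusion drops sharply. The `Snapshot` fields and all three thresholds are hypothetical defaults chosen for illustration, not industry benchmarks; the AI inclusion figure would come from repeated sampling like the frequency tracking described earlier.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    avg_rank: float        # average Google position for tracked keywords
    organic_sessions: int  # organic traffic for the period
    ai_inclusion: float    # share of sampled AI answers mentioning the brand (0-1)


def diverging(before: Snapshot, after: Snapshot,
              rank_tolerance: float = 1.0,
              traffic_tolerance: float = 0.10,
              inclusion_drop: float = 0.15) -> bool:
    """True when SEO looks stable but AI inclusion falls sharply (arbitrary thresholds)."""
    rank_stable = abs(after.avg_rank - before.avg_rank) <= rank_tolerance
    traffic_stable = (
        before.organic_sessions > 0
        and abs(after.organic_sessions - before.organic_sessions) / before.organic_sessions
        <= traffic_tolerance
    )
    inclusion_fell = (before.ai_inclusion - after.ai_inclusion) >= inclusion_drop
    return rank_stable and traffic_stable and inclusion_fell


# Hypothetical quarter-over-quarter figures.
q1 = Snapshot(avg_rank=4.2, organic_sessions=10_000, ai_inclusion=0.60)
q2 = Snapshot(avg_rank=4.5, organic_sessions=9_700, ai_inclusion=0.30)
print(diverging(q1, q2))
# True
```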

Visibility Is Now About Being Understood

AI visibility is not about ranking higher. It is about being understood well enough to be represented accurately.

Generative search selects brands based on clarity, consistency, and trust, not rankings alone. AI systems decide which brands to include and how to describe them, turning visibility into a reputation outcome rather than a traffic metric.

If AI systems are already speaking on your behalf, the real question is simple: do they understand your brand well enough to get it right?